A number of people asked about the technical aspects of the great Delicious exodus of 2010, and I've finally had some time to write it up. Note that times on all the graphs are UTC.

On December 16th Yahoo held an all-hands meeting to rally the troops after a big round of layoffs. Around 11 AM someone at this meeting showed a slide with a couple of Yahoo properties grouped into three categories, one of which was ominously called "sunset". The most prominent logo in the group belonged to Delicious, our main competitor. Milliseconds later, the slide was on the web, and there was an ominous thundering sound as every Delicious user in North America raced for the exit. [*]

I got the message just as I was starting work for the day. My Twitter client, normally a place where I might see ten or twenty daily mentions of Pinboard, had turned into a nonstop blur of updates. My inbox was making a kind of sustained pealing sound I had never heard before. It was going to be an interesting afternoon.

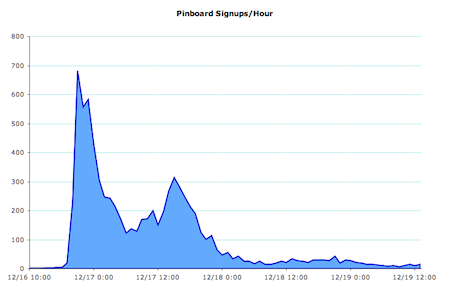

Before this moment, our relationship to Delicious had been that of a tick to an elephant. We were a niche site and in the course of eighteen months had siphoned off about six thousand users from our massive competitor, a pace I was very happy with and hoped to sustain through 2011. But now the Senior Vice President for Bad Decisions at Yahoo had decided to give us a little help.

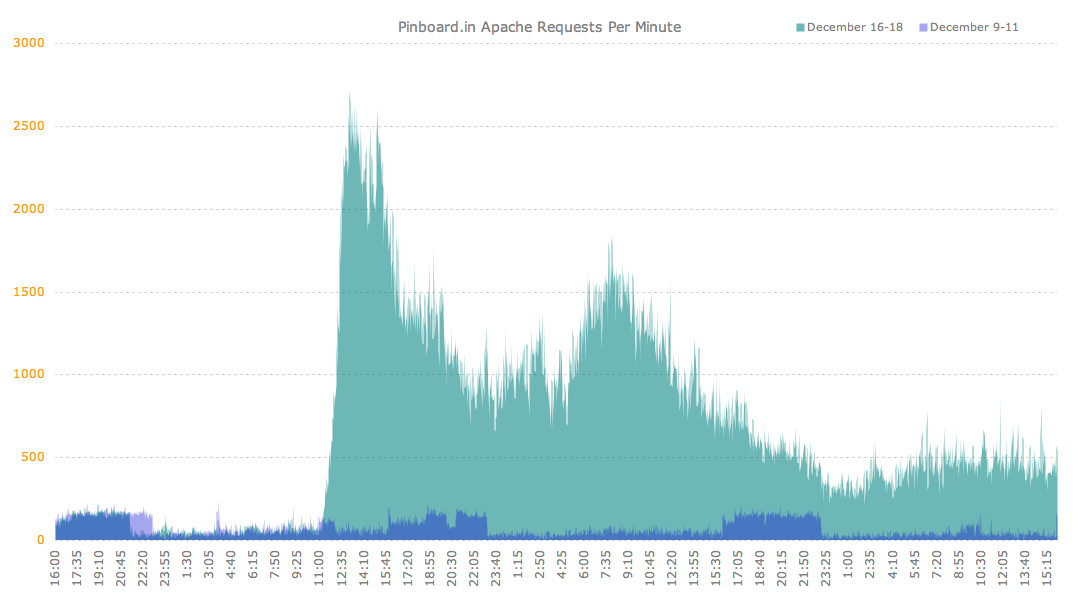

I've previously posted this graph of Pinboard web traffic on the days immediately before and after the Delicious announcement. That small blue bar at bottom shows normal traffic levels from the week before. The two teal mountain peaks correspond to midday traffic on December 16th and 17th.

My immediate response was to try to log into the server and see if there was anything I could do to keep it from falling over. Cegłowski's first law of Internet business teaches: "Never get in the way of people trying to give you money", and the quickest way to violate it would have been to crash at this key moment. To my relief, the server was still reachable and responsive. A glance at apachetop showed that web traffic was approaching 50 hits/second, or about twenty times the usual level.

This is not a lot of traffic in absolute terms, but it's more than a typical website can handle without warning. Sites like Daring Fireball or Slashdot that are notorious for crashing the objects of their attention typically only drive half this level of traffic. I was expecting to have to kill the web server, put up a static homepage, and try to ease the site back online piecemeal. But instead I benefitted from a great piece of luck.

Pinboard shares a web server with the Bedbug Registry, a kind of public forum for people fighting the pests. I started the registry in 2006 (a whole other story) and it existed in quiet obscurity until the summer of 2010, when bedbugs infested some high-profile retail stores in New York City and every media outlet in the country decided to run a bedbug story at the same time.

The Summer of Bug culminated in a September link from the CNN homepage that drove a comparable volume of traffic (about 45 hits/second) and quickly turned the server to molasses. At that point Peter spent a frantic hour reconfiguring Apache and installing Pound, a reverse proxy, in order to safely absorb the attention. Neither of us realized we had just stress-tested Pinboard for the demise of Delicious three months later. [**]

Thanks to this, the Pinboard web server ran like a champ throughout the Delicious exodus even as other parts of the service came under heavy strain. We were able to keep our median page display times under a third of a second through the worst of the Yahoo traffic, while a number of other sites (and even the Delicious blog!) went down. This gave us terrific word-of-mouth later.

Of course, had bedbugs been found in the Delicious offices, our server would have been doomed.

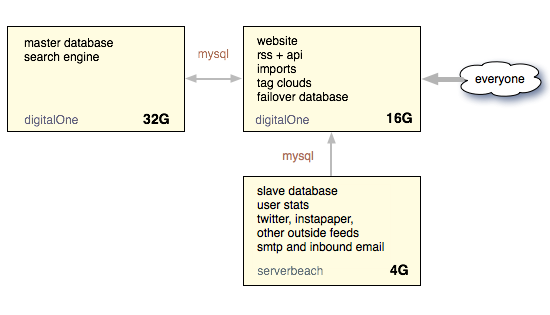

Another piece of good luck was that I had overprovisioned Pinboard with hardware, basically due to my great laziness. Here's what our network setup looked like on that fateful day:

As you can see, we had a big web server connected to an even bigger database server, with a more modest third machine in charge of background tasks.

It has become accepted practice in web app development to design in layers of application caching from the outset. This is especially true in the world of Rails and other frameworks, where there is a tendency to treat one's app like a high-level character in a role-playing game, equipping it with epic gems, sinatras, capistranos, and other mithril armor and assembling the lot into a mighty "application stack".

I had just come out of Rails consulting when I started Pinboard and really wanted to avoid this kind of overengineering, capitalizing instead on the fact that it was 2010 and a sufficiently simple website could run ridiculously fast with no caching if you just threw hardware at it. After trying a number of hosting providers I found Digital One, a small Swiss company that rented out HP blade servers with prodigious (at least by web hosting standards) quantities of RAM. This meant that our two thousand active users were completely swallowed up within a vast, cathedral-like database server.

If you offer MySQL this kind of room, your data is just going to climb in there and laugh at you no matter what kind of traffic it gets. Since Pinboard is not much more than a thin wrapper around some carefully tuned database queries, users and visitors could page through bookmarks to their hearts' content without the server even noticing they were there. That was the good news.

The bad news was that it had never occurred to me to test the database under write load.

Now, I can see the beardos out there shaking their heads. But in my defense, heavy write loads seemed like the last thing Pinboard would ever face. It was my experience that people approached an online purchase of six dollars with the same deliberation and thoughtfulness they might bring to bear when buying a new car. Prospective users would hand-wring for weeks on Twitter and send us closely worded, punctilious lists of questions before creating an account.

The idea that we might someday have to worry about write throughput never occurred to me. If it had, I would have thought it a symptom of nascent megalomania. But now we were seeing this:

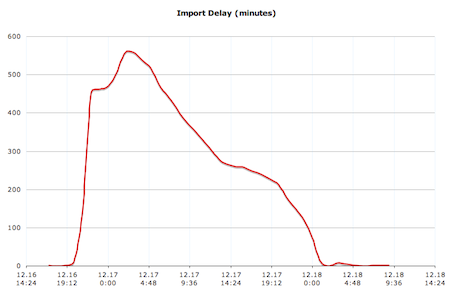

There were thousands of new users, and each arrived clutching an export file brimming with precious bookmarks. Within a half hour of the onslaught, I saw that imports were backing up badly, the database was using all available I/O for writes, and the two MySQL slaves were falling steadily behind. Try as I might, I could not get the imports to go through faster. By relational database standards, 80 bookmark writes per second should have been a quiet stroll through a fragrant meadow, but something was clearly badly broken.

To buy a little time, I turned off every non-essential service that wrote anything to the database. That meant no more tag clouds, no bookmark counts, no pulling bookmarks from Instapaper or Twitter, and no popular page. It also meant disabling the search indexer, which shared the same physical disk.

Much later we would learn that the problem was in the tags table. In the early days of Pinboard, I had set it up like this:

    create table tags (
      tag char(255),
      bookmark_id int,
      ....
      unique index (tag, bookmark_id)
    ) charset=utf8;

Notice the fixed-length char instead of the saner variable-length varchar. This meant MySQL had to use 765 bytes per tag just for that one field, no matter how short the actual tag was[***]. I'm sure what was going through my head was something like 'fixed-width rows will make it faster to query this table. Now how about another beer!'.
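Here is a small Python sketch that puts numbers on the footnote's explanation by estimating the space the `tag` column alone needs under each declaration. It assumes, as the post describes, that a `char(255)` column in MySQL's 3-byte `utf8` charset is always sized for the worst case. It also assumes that a `varchar(255)` value stores its actual UTF-8 bytes plus a 2-byte length prefix. MySQL uses two length bytes when a column's values may exceed 255 bytes, which a 255-character `utf8` column can. Row headers, the other columns, and index overhead are ignored. The function names and sample tags are hypothetical.

```python
# Rough per-value storage for the `tag` column under two declarations.
# Assumptions (not measured): CHAR(255) in MySQL's 3-byte "utf8" charset is
# sized for the worst case; VARCHAR(255) stores the real UTF-8 bytes plus a
# 2-byte length prefix. Row headers, other columns and indexes are ignored.

MAX_CHARS = 255
UTF8_MAX_BYTES_PER_CHAR = 3  # MySQL's legacy "utf8" (utf8mb3)
VARCHAR_LENGTH_PREFIX = 2    # values may exceed 255 bytes, so 2 length bytes


def char_column_bytes(tag: str) -> int:
    """Fixed-width CHAR(255): the same size no matter how short the tag is."""
    return MAX_CHARS * UTF8_MAX_BYTES_PER_CHAR


def varchar_column_bytes(tag: str) -> int:
    """Variable-width VARCHAR(255): encoded length plus the length prefix."""
    return len(tag.encode("utf-8")) + VARCHAR_LENGTH_PREFIX


if __name__ == "__main__":
    for tag in ["python", "mysql", "to-read", "café"]:
        print(f"{tag:8} char: {char_column_bytes(tag)}  varchar: {varchar_column_bytes(tag)}")
```

By this estimate, a short tag like `python` needs 765 bytes as `char(255)` but only 8 as `varchar(255)`, a difference of roughly a hundredfold.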

Having made this brilliant design decision, I so thoroughly forgot about it that in later days I was never able to figure out why our tags table took forever to load from backup. The indexes all seemed sane, and yet it took ages to re-generate the table. But of course what had happened was the table had swollen to monstrous size, sprawling over 80 GB of disk space. Adding to this table and updating its bloated index consumed three quarters of the write time for every bookmark.

Had I realized this that fateful afternoon, I might have tried making some more radical changes while my mind was still fresh. But at that moment the full magnitude of what we were dealing with hadn't become clear. We had had big spikes in attention before (thanks @gruber and @leolaporte!), and they usually faded quickly after a couple of hours. So I focused my efforts on answering support requests. Brad DeLong, the great economics blogger, was kind enough to collect and publish our tweet stream from that day for posterity.

We had always prided ourselves on being a minimalist website. But the experience for new users now verged on Zen-like. After paying the signup fee, a new user would upload her Delicious bookmarks, see a message that the upload was pending, and... that was it. It was possible to add bookmarks by hand, but there was no tag cloud, no tag auto-completion, no suggested tags for URLs, the aggregate bookmark counts on the profile page were all wrong, and there was no way to search bookmarks less than a day old. This was a lot to ask of people who were already skittish about online bookmarking. A lot of my time was spent reassuring new users that their data was safe and that their money was not winging its way to the Cayman Islands.

At seven PM Diane ran out for a bottle of champagne and we gave ourselves ten minutes to celebrate. Here I am watching three hundred new emails arrive in my mailbox.

To add spice to the evening, our outbound mail server had now started to crash. Each crash required opening a support ticket and waiting for someone in the datacenter to reboot the machine. Whenever this happened, activation emails would queue up and new users would be unable to log in until the machine came back online. This diverting task occupied me until midnight, at which point I had been typing nearly nonstop for eleven straight hours and had lost about fifty IQ points. And imports were still taking longer and longer: at this point, over six hours.

Pinboard has a three-day trial period, and I was now having nightmare visions of spending the next ten days sitting in front of the abysmally slow PayPal site, clicking the 'refund' button and sniffling into a hankie.

My hope had been that we could start to catch up after California midnight, when web traffic usually dies down to a trickle. But of course now Europe was waking up to the Yahoo news and panicking in turn. There was no real let-up, just the steady drumbeat of new import files. At our worst we fell about ten hours behind with imports, and my wrists burned from typing reassuring emails to nervous new customers explaining that their bookmarks would, in the fullness of time, actually show up on the big blank spot that was their homepage. We added something like six million bookmarks in the first 24 hours (doubling what we had collected in the first year and a half of running the site), another 2.5 million the following day, and a cumulative ten million new bookmarks in that first week.
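The import lag follows from simple queueing arithmetic. When bookmarks arrive faster than the database can write them, the backlog grows. A freshly uploaded file then waits roughly backlog ÷ write rate before its turn comes. The minimal Python sketch below assumes constant arrival and write rates, which real, bursty traffic never has. The 80 writes/second figure from earlier in the post is used only as an illustrative rate, and the function names are hypothetical.

```python
def wait_seconds(backlog: int, writes_per_second: float) -> float:
    """Expected wait for a newly queued upload: everything ahead must be written first."""
    if writes_per_second <= 0:
        raise ValueError("write throughput must be positive")
    return backlog / writes_per_second


def backlog_after(initial: int, arrivals_per_second: float,
                  writes_per_second: float, seconds: float) -> float:
    """Backlog after `seconds`, assuming constant arrival and write rates."""
    growth = (arrivals_per_second - writes_per_second) * seconds
    return max(0.0, initial + growth)  # a queue can't go negative


if __name__ == "__main__":
    rate = 80.0  # bookmark writes per second (illustrative)
    queued = rate * 10 * 3600  # backlog that corresponds to ten hours of lag
    print(f"{queued:,.0f} bookmarks queued")                      # 2,880,000 bookmarks queued
    print(f"{wait_seconds(int(queued), rate) / 3600:.1f} hours")  # 10.0 hours
    # Six million bookmarks spread evenly over 24 hours, as a per-second rate:
    print(f"{6_000_000 / 86_400:.1f} bookmarks/second")          # 69.4 bookmarks/second
```

Under these assumptions, even a modest excess of arrivals over write capacity, sustained for hours, produces a multi-hour wait. The lag only shrinks once arrivals drop below the write rate.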

This graph shows the average expected time in minutes users had to wait after uploading their stuff:

It wasn't until dawn that the import lag started to decrease. It was now the Friday immediately before Christmas week, and I felt if we could steer the site safely into the weekend we would get some breathing room. Saturday night would be the perfect time to run the expensive ALTER TABLE statement that would fix the tags issue. Comforted by this thought I went out for a run (because why not?), and then dived into bed with iron instructions to be awakened ninety minutes later, no matter how much I cried.

Fully refreshed, I could turn my attention to the next pair of crises: tag clouds and archiving.

Tag clouds on Pinboard are a simple UI element that shows the top 200 or so tags you've used on the right side of your home page. Since I had turned off the script that made the clouds, new users were apprehensive that their tags had not imported properly.

Up to this point I had generated tag clouds by running a SQL query that grouped all of a user's tags together and stored the counts in a summary table. Anytime a user added or edited a bookmark they got thrown on a queue, and a script lurking in the background regenerated their tag counts from scratch. This query was fairly expensive, but under minimal load it didn't matter.

Of course, we weren't under minimal load anymore. The obvious fix was to calculate the top tags in code and only update the few counts that had changed. But coding this correctly was surprisingly difficult. The experience of programming on so little sleep was like trying to cook a soufflé by dictating instructions over a phone to someone who had never been in a kitchen before. It took several rounds of rewrites to get the simple tag cloud script right, and this made me very skittish about touching any other parts of the code over the next few days, even when the fixes were easy and obvious. The part of my brain that knew what to do no longer seemed to be connected directly to my hands.
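The difference between the two approaches can be sketched in Python. The old approach recomputes a user's counts from every bookmark they own. The incremental approach compares a bookmark's old and new tag sets and adjusts only the counts that changed, then picks the top tags for the cloud. This is a minimal in-memory sketch with hypothetical names, not Pinboard's code. In production the counts would live in the summary table, and each adjustment would have to be applied atomically.

```python
from collections import Counter
from collections.abc import Iterable


def full_recount(bookmarks: Iterable[set[str]]) -> Counter:
    """Old approach: rebuild every tag count from scratch (cost grows with all bookmarks)."""
    counts: Counter = Counter()
    for tags in bookmarks:
        counts.update(tags)
    return counts


def apply_edit(counts: Counter, old_tags: set[str], new_tags: set[str]) -> None:
    """Incremental approach: touch only the tags that were added or removed."""
    for tag in new_tags - old_tags:
        counts[tag] += 1
    for tag in old_tags - new_tags:
        counts[tag] -= 1
        if counts[tag] <= 0:
            del counts[tag]  # drop tags that are no longer used anywhere


def tag_cloud(counts: Counter, limit: int = 200) -> list[tuple[str, int]]:
    """Top `limit` tags by count, as shown beside the bookmarks."""
    return counts.most_common(limit)


if __name__ == "__main__":
    bookmarks = [{"python", "web"}, {"python"}, {"mysql"}]
    counts = full_recount(bookmarks)
    # Edit the second bookmark: drop "python", add "db".
    apply_edit(counts, {"python"}, {"db"})
    bookmarks[1] = {"db"}
    print(counts == full_recount(bookmarks))  # True: incremental matches a full recount
    print(tag_cloud(counts, 2))
```

Representing each bookmark's tags as a set means a tag repeated on one bookmark counts only once. That is one edge case an incremental version has to settle explicitly, and comparing its result against a full recount, as in the usage above, is a cheap way to test it.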

The second crisis was more serious. For an extra fee, Pinboard offers archival accounts, where the site crawls and caches a copy of every bookmark in your account. New users have the option of signing up for archiving from the start, or upgrading to it later. A large number of recent arrivals had chosen the first option, which meant that we had a backlog of about two million bookmarks to crawl and index for full-text search. It also meant we had a significant group of new users who had paid extra for a feature they couldn't evaluate.[****]

Like many other parts of the service, the crawler was set up to run in one process per server. It was imperative to rewrite the crawler script so that multiple instances could run in parallel on each machine, and then set up an EC2 image so that we could chew through the backlog even faster. The EC2 bill for December came to over $600, but all the bookmarks were crawled by Tuesday, and my nightmare of endless refund requests didn't materialize.
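Running several crawler instances side by side is only safe if each bookmark is claimed by exactly one worker. The minimal Python sketch below uses a thread pool and a shared in-memory queue in place of a real job table. For I/O-bound fetching, threads are a reasonable stand-in for separate processes. `fetch_page` is a hypothetical placeholder for the actual download-and-index step, and nothing here reflects Pinboard's real crawler. With a database-backed queue, the claim step would need to be an atomic update, for example marking a row as taken and checking the affected row count, rather than an in-memory `get`.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def fetch_page(url: str) -> str:
    """Hypothetical placeholder for downloading and indexing one bookmark."""
    return f"archived:{url}"


def crawl_all(urls: list[str], workers: int = 8) -> dict[str, str]:
    """Drain a shared work queue with several workers; each URL is claimed once."""
    jobs: "queue.Queue[str]" = queue.Queue()
    for url in urls:
        jobs.put(url)

    results: dict[str, str] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            try:
                url = jobs.get_nowait()  # atomic claim: no two workers get the same URL
            except queue.Empty:
                return  # backlog drained
            page = fetch_page(url)
            with lock:
                results[url] = page

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(worker) for _ in range(workers)]
        for future in futures:
            future.result()  # re-raise any exception from a worker
    return results


if __name__ == "__main__":
    backlog = [f"https://example.com/page/{i}" for i in range(20)]
    print(len(crawl_all(backlog, workers=4)))  # 20
```

Adding machines then comes down to starting more workers against the same queue. The one-claim-per-job rule is what keeps two machines from crawling the same bookmark twice.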

On Monday our newly provisioned server came online. Figuring that overkill had served me well so far, this one had 64 GB of memory and acres of disk space. On Tuesday morning I was invited to appear on net@night with Leo Laporte and Amber MacArthur, who were both terrifically encouraging. At this point I could barely remember my own name. And then, mercifully, it was Christmas, and everyone got offline for a while.

In these writeups it's traditional to talk about LESSONS LEARNED, which is something I feel equivocal about. There's a lot I would have done differently knowing what was coming, but the whole thing about unexpected events is that you don't expect them. Most of the decisions that caused me pain (like never taking the time to parallelize background tasks) were sensible trade-offs at the time I made them, since they allowed me to spend time on something else. So here I'll focus on the things that were unequivocally wrong:

Too many tasks required typing into a live database

It is terrifying and you are very tired. At the outset Peter and I had to do live SQL queries to find user accounts, fix names, emails, and logins, and do other housekeeping tasks. I lived in constant fear of forgetting a WHERE clause.
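One cheap safeguard is to run ad-hoc fixes inside a transaction and roll back if they touch more rows than expected. The minimal sketch below uses Python's standard-library `sqlite3` module so it runs anywhere. The `users` table and the `guarded_update` helper are hypothetical. For MySQL specifically, the `mysql` command-line client's `--safe-updates` option refuses UPDATE and DELETE statements that neither use a key column in the WHERE clause nor have a LIMIT.

```python
import sqlite3


class TooManyRows(Exception):
    """Raised when a statement would modify more rows than the caller expected."""


def guarded_update(conn: sqlite3.Connection, sql: str, params: tuple = (),
                   max_rows: int = 1) -> int:
    """Run a data-modifying statement; roll back if it touches more than max_rows rows."""
    try:
        cur = conn.execute(sql, params)  # sqlite3 opens a transaction before UPDATE/DELETE
        if cur.rowcount > max_rows:
            raise TooManyRows(f"{cur.rowcount} rows affected, expected at most {max_rows}")
    except Exception:
        conn.rollback()
        raise
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO users (email) VALUES (?)",
                     [("a@example.com",), ("b@example.com",)])
    conn.commit()

    # Intended fix: one user's email.
    guarded_update(conn, "UPDATE users SET email = ? WHERE id = ?", ("new@example.com", 1))

    # Forgotten WHERE clause: it would rewrite every row, so it is rolled back.
    try:
        guarded_update(conn, "UPDATE users SET email = ?", ("oops@example.com",))
    except TooManyRows as err:
        print("rolled back:", err)  # rolled back: 2 rows affected, expected at most 1
```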

We had no public status page

I could have avoided a very large volume of email correspondence by having a status page to point to that told people what services were running and which were temporarily disabled.
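A status page comes almost for free if the switches used to shed load live in one place. Those are the switches that turned off tag clouds, search indexing, and the popular page. The minimal Python sketch below works under that assumption. The service names mirror the post, but the `SERVICES` registry and its functions are hypothetical. In a real deployment the flags would sit in the database or a shared file, so that the web server and the background machine agree on them.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    enabled: bool = True
    note: str = ""  # shown to users while the service is paused


# Hypothetical registry of features that can be switched off under load.
SERVICES = {
    "tag_clouds": Service("Tag clouds"),
    "search_indexer": Service("Search indexing"),
    "popular_page": Service("Popular page"),
    "imports": Service("Bookmark imports"),
}


def disable(key: str, note: str) -> None:
    """Pause a service and record the reason users will see."""
    SERVICES[key].enabled = False
    SERVICES[key].note = note


def should_run(key: str) -> bool:
    """Background jobs check this before writing anything to the database."""
    return SERVICES[key].enabled


def status_page() -> str:
    """Plain-text status listing, one line per service."""
    lines = []
    for svc in SERVICES.values():
        state = "OK" if svc.enabled else f"PAUSED: {svc.note}"
        lines.append(f"{svc.name}: {state}")
    return "\n".join(lines)


if __name__ == "__main__":
    disable("tag_clouds", "temporarily off to speed up imports")
    print(status_page())
```

Because the same flag both stops the background job and drives the status line, the page can't drift out of sync with what is actually running.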

I assumed slaves would be within a few minutes of the master

There were multiple places in my code where I queried a slave and updated the master. This only works if you don't care about being many hours out of date. For example, it would have been fine for the popular page, but was not acceptable for bookmark counts.
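The failure mode is a read-modify-write split across servers. Code reads a value from a lagging slave, computes a new value, and writes it to the master. Any changes the slave hasn't caught up on are then silently overwritten. The minimal Python sketch below uses two dictionaries to stand in for the master and a replica (slave). The function names and the staleness thresholds are hypothetical. In MySQL, a lag estimate can be read from the `Seconds_Behind_Master` field of `SHOW SLAVE STATUS`, which is NULL when replication isn't running.

```python
from typing import Optional


def stale_increment(master: dict, replica: dict, key: str) -> None:
    """Buggy pattern: read from the replica, write the result to the master."""
    master[key] = replica.get(key, 0) + 1


def safe_increment(master: dict, key: str) -> None:
    """Read and write on the same server (in SQL: UPDATE ... SET n = n + 1)."""
    master[key] = master.get(key, 0) + 1


def pick_read_source(lag_seconds: Optional[float], max_staleness: float) -> str:
    """Send reads to the replica only when it is fresh enough for this query."""
    if lag_seconds is None or lag_seconds > max_staleness:
        return "master"  # replication stopped, or too far behind
    return "replica"


if __name__ == "__main__":
    master = {"count": 100}
    replica = {"count": 40}  # hours behind: hasn't seen the latest bookmarks
    stale_increment(master, replica, "count")
    print(master["count"])  # 41, not 101

    master = {"count": 100}
    safe_increment(master, "count")
    print(master["count"])  # 101

    # A popular page can tolerate an hour of lag; bookmark counts cannot.
    print(pick_read_source(6 * 3600, max_staleness=3600))  # master
    print(pick_read_source(30, max_staleness=3600))        # replica
```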

There were also some things that went well:

We used dedicated hardware

To quote a famous businessman: "It costs money. It costs money because it saves money".

We charged money for a good or service

I know this one is controversial, but there are enormous benefits and you can immediately reinvest a whole bunch of it in your project *sips daiquiri*. Your customers will appreciate that you have a long-term plan that doesn't involve repackaging them as a product.

If Pinboard were not a paid service, we could not have stayed up on December 16, and I would have been forced to either seek outside funding or close signups. Instead, I was immediately able to hire contractors, add hardware, and put money in the bank against further development.

I don't claim the paid model is right for all projects that want to stay small and independent. But given the terrible track record of free bookmarking sites in particular, the fact that a Pinboard account costs money actually increases its perceived value. People don't want their bookmarks to go away, and they hate switching services. A sustainable, credible business model is a big feature.

So that's the story of our big Yahoo adventure - ten million bookmarks, eleven thousand new users, forty-odd refunds, and about a terabyte of newly-crawled data. To everyone who signed up in the thick of things, thank you for your terrific patience, and for being so understanding as we worked to get the site back on its feet.

And a final, special shout-out goes to my favorite company in the world, Yahoo. I can't wait to see what you guys think of next!

* The list also included the mysteriously indestructible Yahoo Bookmarks, though that didn't seem to affect anyone. How Yahoo Bookmarks has persisted into 2011 remains one of the great unsolved mysteries of computer science.

** I should point out that Yahoo claims Delicious is alive and well, and will bounce back better than ever just as soon as they can find someone — anyone — to please buy it. Since the entire staff has been fired and the project is a ghost ship, I'm going to stick with 'demise'.

*** This is because utf8 strings in MySQL can be up to three bytes per character, and MySQL has to assume the worst in sizing the row.

**** I ended up extending the refund window by seven days and giving everyone a free extra week of archiving.

—maciej on March 08, 2011

Pinboard is a bookmarking site and personal archive with an emphasis on speed over socializing.

This is the Pinboard developer blog, where I announce features and share news.

How To Reach Help

Send bug reports to bugs@pinboard.in

Talk to me on Twitter

Post to the discussion group at pinboard-dev

Or find me on IRC: #pinboard at freenode.net